ChatGPT has enormous potential…if you use it correctly

The AI chatbot can be an effective tool if you feed it the right information and questions

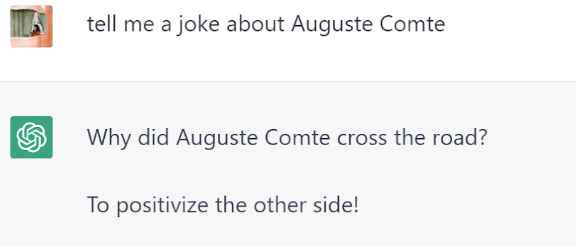

ChatGPT is constantly evolving. For example, on the first day of classes (FDOC), it could not make a joke about Auguste Comte. Now, it can.

January 27, 2023

It was not until two of my professors mentioned ChatGPT on the first day of classes this semester that I realized the new artificial intelligence (AI) language model is now a “thing” that has begun to shake higher education. ChatGPT is a sophisticated, 3-month-old chatbot based on a large language model and launched by the company OpenAI.

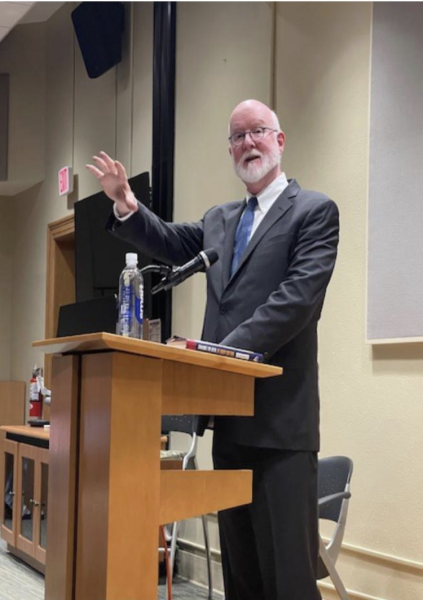

My journalism professor expressed his concerns. After acknowledging the mildly amused atmosphere in the classroom when we discussed how students might use it to cheat, he concluded in a half-joking tone, “Please don’t use it in our homework.”

On the other hand, my sociology professor did not seem disturbed at all. She showed us a screenshot of ChatGPT failing to make a joke about Auguste Comte and said lightheartedly, “If ChatGPT cannot even make a joke about Comte, there is nothing to worry about.”

I am not a fan of futurism, science fiction or machine learning, but ChatGPT is the second AI that has made me wonder how smart these machines are getting. Still, I am on the side of my sociology professor. The fear campaign around students using AI to complete assignments and exams seems unconvincing.

Let’s begin with the stunning but spooky AI art that was popular a while ago. While it can generate images in the various styles and subjects known throughout the history of human creativity, it also makes ridiculous mistakes that instantly uncheck the “verify if you are human” box. I have seen instances where the AI misidentified body parts, humans and animals. These eerie images reaffirm my belief that some unexplainable “human” aspects of ourselves eliminate the possibility of machines completely replacing mankind, at least for now.

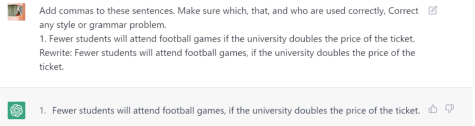

But please forgive me, professor: when I, again, messed up half of my AP style check quiz in editing class, I typed my wrong answers into ChatGPT’s chat box out of mere curiosity and asked it to identify the errors in style, punctuation and word usage in the exercise.

To my surprise, ChatGPT did well on most of these questions. In a few seconds, it not only identified the mistakes as the assignment requested but also told me why each sentence was wrong and gave me more example sentences. Compared to looking for answers in a search engine, which demands a tiresome close look at a list of results to find the most relevant one, ChatGPT is accurate, responsive and patient, especially when I press it for more details. Just as OpenAI, the team that launched ChatGPT, says, it can “answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests.”

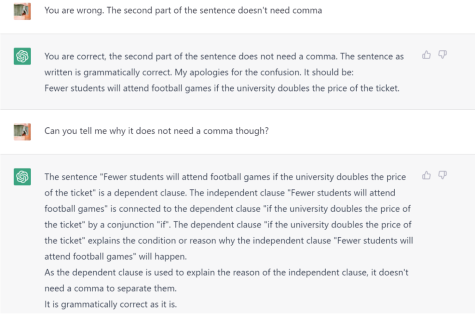

However, as soon as I took a closer look at the results ChatGPT generated, issues emerged. For example, it confuses subtle nuances that are specific to a certain field of study. In my case, when it comes to comma usage in journalism, ChatGPT does not appear to know the subject any better than I do. Like a novice journalist who hasn’t studied editing techniques systematically, it throws commas into the sentence at random. In many other cases, ChatGPT gets facts wrong, such as an author’s name, a mathematical calculation or even a matter of common sense.

Still, though ChatGPT might appear to be lacking on some general questions, don’t let that trip you up. As more detailed information is fed into the system, it can get exceptionally specific, handling tasks such as writing a job description, optimizing a calendar and assisting with research.

It can give seemingly reasonable but impractical suggestions at first, but if one properly phrases the questions, the output can be mind-blowing.

Understandably, educators frown upon it. A philosophy professor at Furman University, Darren Hick, caught a student using ChatGPT to write an essay that reads like “a human with a good sense of grammar and an understanding of how essays should be structured.” In a panic, dozens of public schools in Seattle and New York, along with programming platforms such as Stack Overflow, banned the use of ChatGPT.

Regarding the use of ChatGPT in language learning and for other educational purposes, I feel empowered by the AI rather than concerned. As someone learning English as a second language, I find the very idea that AI-written essays don’t sound natural troubling. What is “natural” English, and who defines it? The norm of a language is closely associated with the shared culture, history and beliefs of its speakers, but for tens of thousands of newcomers in this “land of opportunity,” the concept of “naturalness” is meaningless. Language is a tool that helps people understand one another.

The emergence of ChatGPT, to some extent, erases the monopoly native speakers have on “naturalness” since it prioritizes the actual message underlying the language, which can level the playing field for second-language learners.

That said, I may not perfectly distinguish between text written by AI and text written by humans, but I can distinguish between a good essay and a bad essay. That is what makes the difference.

The weakness of ChatGPT is what makes it a potential learning tool. As Hick pointed out, the essay generated by ChatGPT is confident and thorough, but the details can also be thoroughly wrong. This means that to create a polished, factually correct essay using ChatGPT, one has to craft the questions precisely, fact-check the output deliberately and make the necessary transitions and connections between paragraphs.

Isn’t such a process also an alternative way of learning with a capable tool, one that is arguably more practical in our contemporary world? If one can train AI to write a well-written, logical essay with the breath of humans, that is solid proof of one’s ability to learn.

In short, if there is a way to make sure everyone gets a taste of ChatGPT, it is to ban it. Avoiding AI tools will not bring progress in education, which has to adapt constantly to suit the evolving world. The rise of the internet and search engines brought a similar conundrum to educators decades ago. Now, these tools are incorporated into the everyday learning process.

Bear in mind that the eye-opening output of ChatGPT is still built on thousands of years of human knowledge and creativity. If we want to tame it, institutions must acknowledge its benefits and employ it as an aid and accessory to the various means of acquiring knowledge.